Welcome to the Club of Amsterdam Journal.

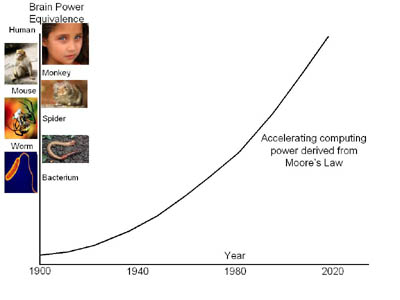

According to the Singularity Institute, the (technological) singularity is the technological creation of smarter-than-human intelligence. Simple as the definition may look, it signals the end of 5 million years of human evolution, with the emergence of superintelligence as a result of exponential technological development. Although other technologies have also been suggested, artificial intelligence and brain interfaces are thought to be the likeliest to first reach the level of acceleration necessary to bring about such an enormous change. With approximately 100 billion neurons, the human brain easily surpasses today's computing power. However, this does not change the fact that the human brain has only tripled its capacity over the entire course of evolution, while computing benchmarks increase exponentially, doubling every one or two years. Hypothetically, this information provides the variables needed to draw up a timetable for closing the capacity gap between the human brain and the foreseen technologies. Reaching such a threshold will create a loop, or an explosion like the big bang, some would say (Ray Kurzweil), which in turn will make it possible, for instance, for artificial intelligence to improve its own source code, automatically overturning the superiority of human intelligence as we have known it.

Interested in knowing more and sharing thoughts and ideas? Join us at the event about the future of the Singularity on Thursday, 19 May!

Felix Bopp, editor-in-chief

A Measure of Machine Intelligence

By Peter Cochrane, Cochrane Associates UK

PROLOGUE

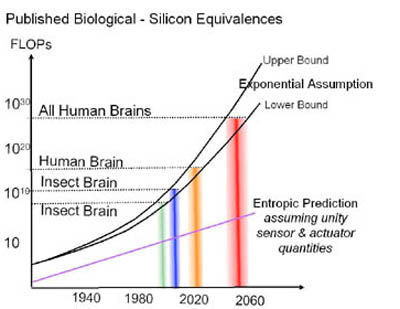

In 1997 Garry Kasparov and the world of chess were outraged when he was defeated by Deep Blue (IBM). This was a long-awaited epoch: the time when man would be outclassed by machine in a game seen as intellectually superior and beyond the reach of a mere 'clockwork' mind. At the time the sound-bites included: 'something strange is going on; it didn't play a regular game of chess; it didn't play like a human; it didn't play fair', etc. [1]

Interestingly, no one asked the most important question: how did Deep Blue win – what was the magic here? [2] The key was a new intelligence – a powerful computer that didn't, or couldn't, think like us. It brought something new – a new dimension, a new way of thinking and problem solving – and one devoid of emotion. That was the prime value – a new approach – and it is also the key contribution on offer from all Artificial Intelligence(s) (AI).

AI was mired in controversy from the outset. [3] Unreasonable expectations, exaggerated claims, broken promises, and delayed deliverables are but a few damaging manifestations of a singular problem: a lack of understanding of complexity, coupled with an inability to define and quantify intelligence. [4] To a large degree, expectations were set by Hollywood's depictions of humanoid intelligences comparable to or exceeding ours. [5][6] Futurists have also been guilty of using questionable assumptions that led to graphs of the following form:

Fig 1: Typical prediction curve for machine intelligence

REALITY CHECK

Industry and commerce now depend upon AI for the control of engines, elevators, logistics, finance, banking, production robotics, and networks. [6] Surprisingly, then, we still lack a complete understanding of what intelligence is and how to quantify it, [7] and that HAL9000 (2001) conversation with a machine still seems a distant dream. [5]

However, we are constantly surprised by AI systems and the answers they contrive. On many occasions we lack the facility to fully understand them, but that does not preclude us from using the results! [8] Moreover, we have gradually realised that the solution of industrial, scientific and governmental problems will continue to defy human abilities, whilst AI will continue to improve as it evolves to embrace larger data sets and sensor networks. On the creativity front we have seen many of our key electronic and system inventions enhanced (or bettered) by AI, and in some quarters machine-based contributions outweigh those of humans. [8] In fact, the machine I am typing this article on has chips that owe more to machine design than to any human contributor, and we might expect this disparity to grow further. [9][10]

Our system of mathematics is a key limiter, as it constrains our analysis and design of systems with large numbers of feedback/feed-forward loops. [11] Machines know no such constraints and exploit designed and parasitic loops to advantage in ways we do not fully understand. It should come as no surprise, then, that we cannot fully describe and understand many key electronic elements, and that phenomena such as the non-laminar flow of fluids and gases have to be modelled by machine.

FACILE DEBATE

So far the march of AI has transited several hotly contested stages, with a few more to go:

1) Machines can't think!

2) Machines will never be intelligent and creative!

3) Machines will never be self-aware!

4) Machines will never have imagination!

5) Machines will never hypothesise or conceptualise!

6) Machines will never be more intelligent than us!

Such arguments seem to be born of ignorance, fear, religious belief, and limited imagination and vision, but not of scientific thinking. For sure, (1 & 2) above have been surpassed, [9][10] whilst (3) is currently being contested and challenged by combinations of AI and sensors. To some extent (4 – 6) remain the 'Holy Grail' and seem to be prospects that upset far more people than (1 – 3). They also remain some way off, but are more likely to happen than they seemed 20 years ago! [12]

An obvious question is: why should intelligence be sacrosanct and exclusive to carbon-based life systems, and why should we and other animals be so special that only we can develop self-awareness and problem-solving abilities, including the creation of tools? A dispassionate analysis would seem likely to come down on the side of AI!

This situation almost mirrors medieval times when clerics and scholars (may have) debated [13] the number of angels on a pinhead! The fundamental problem is that we cannot describe, define, or quantify any of the fundamental aspects of the argument. [14][15] In short we have no meaningful measure of intelligence! So, a more pragmatic approach is to ignore all debate and get on with developing systems, observing their actions, and trying to understand the fundamentals – whilst periodically addressing the core question; what is intelligence, and can it be quantified?

THINKING DIFFERENT

There are two 'wisdoms' from my student days I periodically recall. The first came from an engineering academic with a long and distinguished industrial career. His words still ring in my ears: "Mr Cochrane, whilst it is acceptable for the mathematicians, physicists and theorists to declare that there is no solution, we in engineering enjoy no such luxury. We always have to find a solution"! The second came from a mathematician with years of industrial experience. Prophetically, he said: "Before you even start a problem it is worth thinking what form the answer might be, and what would be reasonable".

For me, the past 40 years have seen these maxims go from strength to strength, as 'simple-minded' linear assumptions have given way to an increasingly complex and connected world where non-linearity dominates and chaotic behaviours are the norm. [16]

THE CHALLENGE

It was pure serendipity that a customer problem coincided with my efforts to establish some means of quantifying intelligence. The dilemma came in the form of an engineering challenge: to compare AI systems contesting for deployment in an industrial application. Just how do you judge the efficacy of complex, and very large, AI systems?

Fortunately, previous work on a similar problem had prompted the question: what would be involved in the quantification and comparison of 'intelligent systems', and what form might the answer take? So I had also established that I was alone, with no books or published papers offering any depth or glimmer of a solution. I was in new territory, with plenty of opinion and few facts or tried and tested methods offering any real value.

At a modest estimate there are over 120 published definitions of intelligence penned by philosophers and theorists. [14][15] Unfortunately, none provides any real understanding or an iota of quantification. And the long-established IQ measure of Alfred Binet (1904) is both a flawed and a singularly unhelpful idea in this instance. [17] The limitations of the approach were detailed by Binet, but ignored by those eager to apply the IQ concept in all its simplicity and meaningless authority! [18]

A commonly used engineering comparison involves counting the number of processors and interconnections, and using their product as a single figure of merit. This is often combined with Moore's Law projections to justify claims of exponential growth. [19] But this 'product method' seems far too simplistic to be meaningful and certainly does not reflect any notion of intelligence. In fact, machine intelligence estimates on this basis would suggest HAL9000 (2001) should be alive and well, but clearly he is not! [5]

A SUBSET OF WHAT WE KNOW FOR SURE

Like a lot of simple organisms, our machines focus on limited subsets of problems and, unlike us, are not 'general purpose' survivalists. We (Homo sapiens) appear fairly unique in the combination of our mental and physical abilities: bipedal, with binocular vision, opposable digits, and very broad mental powers that facilitate language, communication, conceptualisation and imagination. Whilst other carbon-based species have far bigger brains, better sight and hearing (and other senses), they lack the critical combinations we possess, and so do all machines to date. [20]

From an engineering and theoretical basis it seemed reasonable to start at a very simple level, and to try to build a model that would be applicable, give useful results, and might then expand to the general case. Leveraging the thoughts of others on 'thinking, intelligence, and behaviour', we can glean interesting pointers by considering the following:

1) Slime moulds and jellyfish (et al) [21][22] exhibit intelligent behaviour without distinct memory or processor units. They have 'directly wired' input sensors and output actuators, i.e. they chemically sense, physically propagate and devour food. Of course, a proviso is that we neglect the delay between sensing and reacting as a distinct memory function, and the sensor-actuator as a one-bit processor, on the basis that both are so minimal as to be insignificant. This turns out to be a good engineering approximation given the processing and memory capabilities of far more complex machines and organic systems.

Reproduced with the kind permission of Mike Johnston and Paul Morris

Fig 2: Slime mould & jellyfish exhibit intelligence without a brain

2) In the main, our machines keep memory and processing as distinct entities (FLASH, RAM, HD), but this is seldom so in organic systems, where they tend to be distributed and to share functionality. [23] But again, this assumption of separation suffices for the class of machines being considered.

Reproduced with the kind permission of Richard Greenhill and Hugo Elias

Fig 3: A modern robotic hand with separate sensor and actuator functions

3) It turns out that whilst intelligent behaviour is possible without memory or processor, it is not possible without sensors and actuators in combination. This was recently writ large by a series of experiments on human subjects. Place a fully able human in an MRI scanner, ask them to close their eyes and imagine they are playing tennis, and their brain lights up. Now repeat that experiment with patients who have been in a coma for many years, and the same result is often evident! [24][25]

So these poor victims have an input mechanism that is functional, but no means of communicating with the outside world. To the casual observer, and until very recently to the medical profession, they have appeared brain dead: mere inanimate entities, living and breathing, but non-functional!

4) Colonies of relatively incapable entities such as ants, termites, bees and wasps possess a 'hive/swarm intelligence' that is extremely adaptive and capable of complex behaviours. [25] Moreover, whilst the 'rules of engagement' of the individuals might be easy to define, the collective outcome is not! Another key feature is the part played by evolution over millennia, honing these colonies to become fit for purpose. Unlike us, Mother Nature optimises nothing and concentrates on fit-for-purpose solutions. This makes her systems extremely resilient, with a high percentage of survivability overall, as she is also impartial to the loss of a colony or indeed a complete species no longer suited to a changing environment. [26]

Reproduced with the kind permission of Humanrobo

Fig 4: Designed and optimised for a single purpose

5) Our final observation is that all forms of intelligence encountered to date invoke state changes in their environment. Such a change can be an expansion or a compression of the quantity of information or state. For example, the answer to the question 'why is the sky blue?' would contain far more words, and perhaps some diagrams, whilst the reply to 'do we know why the sky is blue?' would be a simple yes!

KEY ASSUMPTION & DEFINITIONS

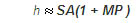

Based upon what we actually know, it seems entirely reasonable to assume an entropic measure to account for the reduction or increase in the system state before and after the application of intelligence. [27] We therefore define a measure of comparative intelligence as:

$$\text{Applied Intelligence} = \text{The Change in Entropy} = I_a = \left| E_i - E_o \right| \qquad (1)$$

Where

Ei = The input or starting entropy

Eo = The output or completion entropy

We take the modulus value here, as we are using the 'state change' as our measure.

Entropy = E = The amount of information needed to exactly define the system's state.

And for the purpose of an efficacy measure we include the 'time to complete' component in the form of machine cycles (N) or FLOPs (floating-point operations): (2)

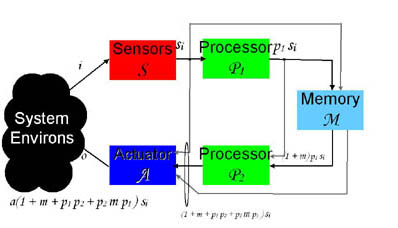
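Equation (1) can be made concrete with a minimal sketch. It assumes, purely for illustration, that every possible state of the system is equally likely, so that the information needed to define the state is the base-2 logarithm of the number of possible states (in bits). The two examples (sorting a shuffled deck of cards, and a yes/no reply) and all function names are hypothetical, and the time-to-complete term of equation (2) is deliberately left out.

```python
import math


def uniform_entropy_bits(num_states: float) -> float:
    """Entropy in bits of a system whose state is one of `num_states`
    equally likely states (assumption: uniform distribution)."""
    return math.log2(num_states)


def log2_factorial(n: int) -> float:
    """log2(n!) via lgamma, avoiding a huge intermediate integer."""
    return math.lgamma(n + 1) / math.log(2)


def applied_intelligence(e_in: float, e_out: float) -> float:
    """Equation (1): Ia = |Ei - Eo|, the modulus of the change in entropy."""
    return abs(e_in - e_out)


# Hypothetical example 1: sorting a shuffled 52-card deck (a compression).
e_in = log2_factorial(52)          # any of the 52! orderings is possible
e_out = uniform_entropy_bits(1)    # exactly one sorted ordering: 0 bits
ia_sort = applied_intelligence(e_in, e_out)

# Hypothetical example 2: a yes/no reply, one of two equally likely answers.
ia_yes_no = applied_intelligence(uniform_entropy_bits(2), 0.0)

print(f"sorting a deck: Ia = {ia_sort:.2f} bits")
print(f"yes/no reply:   Ia = {ia_yes_no:.0f} bit")
```

Because the modulus is taken, an expansion of state (a long explanatory answer) scores in the same way as a compression (a sorted deck or a one-word reply).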

For the purpose of modelling we adopt a simple system representative of engineering reality, and Fig 5 shows the relationship between Sensor (S), Actuator (A), Processor (P) & Memory (M). Here it is assumed that sound, light, vibration/movement or chemicals activate the sensor, and the signal $s_i$ is fed to the actuator, processor and memory. The processed output of each is then fed to the actuator. We note that in many biological systems other loops feed signals back to the sensor – typically to adjust the sensitivity, or in anticipation of the actuator response, or indeed of a memorised event sequence. In our case the eyes are a prime example, as we continually adjust their sensitivity. However, for our immediate purpose, and for the sake of clarity, it is easier to leave this aspect out of the analysis. The sum of all the processed signals results in an output from the actuator (sound, light, movement or chemicals) that influences/changes the environment, and so the 'looped process' continues.

To help visualise this, consider a robot picking up and disposing of a plastic cup, or playing a game of chess by physically moving the pieces. All movement would be iterative and determined by the perceived incremental scene – a moment and a movement at a time. At this point it is worth noting that numerous configurations of simpler and more complex kinds are possible. Many of these have been modelled, including multiple sensors and actuators, distributed processing and memory, and far more feedback and feed-forward loops. All have resulted in very similar outcomes.

Fig 5: Assumed System Configuration

We can now derive a transfer function of the form:

$$h = a\,(1 + m + p_1 p_2 + p_2\, m\, p_1)\, s \qquad (3)$$

By consolidating the weighted memory and processing elements, as opposed to their complex operators, this further reduces to:

$$h = SA\,(1 + M[1 + P] + P) \qquad (4)$$

Now, taking (by orders of magnitude) the dominant terms: (5)

ENGINEERING LICENCE

In the general case it is impossible to define the complex nature of the operations performed by S, A, P, M. All we can say is that they change the state of information and action according to the complex operators s(t), a(t), p(t), m(t) in sympathy with the clock cycle of the machine. In very specific situations these states can be described, but in general they cannot. For the purpose of creating a comparative intelligence measure we thus skirt this limitation by applying ‘weighting values’ denoted as: S = Sensor, A = Actuator, P = Processor, M = Memory.
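The looped process of Fig 5 can be sketched as a toy simulation in which single scalar weights stand in for the complex operators, as proposed above. Everything concrete here is an assumption for illustration: the one-dimensional 'environment' (a position to be moved towards a target), the `Weights` values, and the choice that memory simply replays the previously sensed signal. Following the verbal description of Fig 5, the sensor output feeds the actuator directly and via the processor and memory paths, and each actuation changes the environment before the next sensing step.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Weights:
    """Hypothetical scalar weights standing in for the operators s, a, p, m."""
    s: float = 1.0  # sensor gain
    a: float = 0.5  # actuator gain
    p: float = 0.0  # processor path gain (0 = no processor)
    m: float = 0.0  # memory path gain (0 = no memory)


def run_loop(position: float, target: float, w: Weights, steps: int = 20) -> List[float]:
    """Iterate sense -> (direct, processor, memory) -> actuate -> environment."""
    history = [position]
    remembered = 0.0  # memory holds the previously sensed signal
    for _ in range(steps):
        s_i = w.s * (target - position)  # sensor reads the environment
        proc_out = w.p * s_i             # processor path
        mem_out = w.m * remembered       # memory path replays the last reading
        # The actuator sums the direct signal and the processed signals ...
        move = w.a * (s_i + proc_out + mem_out)
        position += move                 # ... and changes the environment
        remembered = s_i
        history.append(position)
    return history


# Directly wired sensor and actuator only (the 'slime mould' case): still converges.
direct_only = run_loop(0.0, 10.0, Weights())
# No sensor, or no actuator: the environment never changes.
no_sensor = run_loop(0.0, 10.0, Weights(s=0.0))
no_actuator = run_loop(0.0, 10.0, Weights(a=0.0))
print(f"direct only: {direct_only[-1]:.4f}")
print(f"no sensor:   {no_sensor[-1]:.4f}")
print(f"no actuator: {no_actuator[-1]:.4f}")
```

In this toy model, setting `p` or `m` to zero still leaves a working loop, whereas setting `s` or `a` to zero freezes it, which mirrors the slime mould and coma observations made earlier.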

ENTROPY RULES!

Using entropic change (1-2) as the defining property of intelligence, and the dominant terms, a reasonably general formula results from our analysis: (6)

(7)

Whilst the relative intelligence is given as:

From (7) we can now confirm two essential properties by inspection:

6) With zero processor and/or memory power, intelligence is still possible

7) With zero sensor and/or actuator power, intelligence is impossible

This is entirely consistent with our (organic) experience and experimental findings. [24][25] Further, it flies in the face of the conventional wisdom of those who worry about 'The Singularity', the point at which machines take over because they outsmart us. [19][28] They assume that intelligence is growing exponentially by way of the product PM and Moore's Law, [29][30] whilst (7) shows it is logarithmic.
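The two properties can also be spot-checked numerically. Because the closed form of (7) is not reproduced here, this sketch uses the weighted transfer function (4) as a stand-in, which is an assumption: it only shows that the output $h$ survives when $P$ and $M$ are zero and vanishes when $S$ or $A$ is zero. It also confirms that (4) is the same expression as the consolidated form of (3), $SA(1 + M + P + MP)$. The weight values are arbitrary.

```python
import itertools
import math


def transfer(s: float, a: float, p: float, m: float) -> float:
    """Weighted transfer function (4): h = SA(1 + M[1 + P] + P)."""
    return s * a * (1 + m * (1 + p) + p)


def transfer_expanded(s: float, a: float, p: float, m: float) -> float:
    """Consolidated form of (3), with p1*p2 -> P and p2*m*p1 -> MP."""
    return s * a * (1 + m + p + m * p)


# Arbitrary weights: check that (4) and the expanded (3) agree everywhere.
values = [0.0, 0.5, 2.0, 1e3]
all_match = all(
    math.isclose(transfer(*w), transfer_expanded(*w), rel_tol=1e-12, abs_tol=1e-12)
    for w in itertools.product(values, repeat=4)
)
print("forms agree:", all_match)

# Property 6: zero processor and memory, yet a non-zero output remains (h = SA).
print(transfer(s=2.0, a=3.0, p=0.0, m=0.0))
# Property 7: zero sensor or actuator, and the output vanishes regardless of P and M.
print(transfer(s=0.0, a=3.0, p=1e6, m=1e6), transfer(s=2.0, a=0.0, p=1e6, m=1e6))
```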

Fig 6: A spread of published (P.M) based predictions v our logarithmic model

So, if we see a 1,000-fold increase in the product of Processing and Memory (the P.M product), intelligence increases by a factor of only 10. Hence a full 1,000,000-fold increase sees intelligence grow just 20-fold. This is far slower than previously assumed and goes some way to explaining the widening gap between prediction and reality!

A further important observation is that sensors and actuators have largely been neglected as components of intelligence to date, but it seems from (7) that they play a key part in the fundamental intelligence of anything! Without them there can be no 'evident' intelligence.
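The contrast between the 'product method' and a logarithmic model can be illustrated with a few lines of arithmetic. The sketch assumes, for illustration only, that the logarithmic model behaves like $k \log_{10}(PM)$ with a hypothetical constant $k$ and hypothetical baseline weights. It does not reproduce the exact factors of 10 and 20 quoted above, but it does show their key feature: a 1,000,000-fold increase in the P.M product yields exactly twice the gain of a 1,000-fold increase, whereas the product measure itself grows by a further factor of 1,000.

```python
import math


def product_measure(p: float, m: float) -> float:
    """The 'product method': a single figure of merit P * M."""
    return p * m


def log_measure(p: float, m: float, k: float = 1.0) -> float:
    """Assumed logarithmic model, k * log10(P * M); k is hypothetical."""
    return k * math.log10(p * m)


def compare(growth: float, p0: float = 1e6, m0: float = 1e6):
    """Scale P by `growth` (so P*M grows by the same factor) and compare both measures."""
    product_ratio = product_measure(p0 * growth, m0) / product_measure(p0, m0)
    log_gain = log_measure(p0 * growth, m0) - log_measure(p0, m0)
    return product_ratio, log_gain


for growth in (1e3, 1e6):
    ratio, gain = compare(growth)
    print(f"P.M x{growth:,.0f}: product measure x{ratio:,.0f}, log-model gain +{gain:.1f}")

ratio_3, gain_3 = compare(1e3)
ratio_6, gain_6 = compare(1e6)
```

Under the product method the two scenarios differ by a factor of 1,000; under the logarithmic model they differ only by a factor of 2.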

MORE THAN A LEAP OF FAITH

If we make a couple of 'big' assumptions to further approximate the intelligence formula, we can make some further interesting observations. We start by assuming that:

$$PM \gg 1 \quad \text{and} \quad AS \cdot PM \gg 1$$

Equation (7) then becomes: (8)

If we now observe that the progress of Actuators, Sensors, Processing Power and Memory technology is exponential with time, ~ $e^{at}$, $e^{st}$, $e^{pt}$ and $e^{mt}$, then the growth in intelligence derived from equation (8) looks like this:

$$\text{Intelligence Rate of Growth} \sim k \cdot a \cdot s \cdot p \cdot t \qquad (9)$$

Equation (9) implies that machine intelligence is growing linearly with time. So the obvious question is: what happens when a large number of intelligent machines are networked? If there are sufficient of them, and their numbers grow exponentially, then, and only then, will we see an exponential growth in intelligence.
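The linear-growth argument, and the effect of networking, can be checked with a toy calculation. It assumes, hypothetically, that the simplified intelligence measure behaves like the natural logarithm of the product $A \cdot S \cdot P \cdot M$, with each weight growing as $e^{rt}$ at an invented rate; the logarithm of a product of exponentials is then linear in $t$. This reproduces only the linear form of (9), not its constant. For the network case it further assumes that $N(t) = N_0 e^{nt}$ identical machines simply add their intelligence, which is a modelling choice for illustration.

```python
import math

# Hypothetical yearly growth rates for actuators, sensors, processing and memory.
RATES = {"a": 0.2, "s": 0.3, "p": 0.4, "m": 0.35}


def single_machine(t: float, rates=RATES) -> float:
    """Assumed measure ln(A*S*P*M), with each weight ~ exp(rate * t)."""
    product = math.prod(math.exp(r * t) for r in rates.values())
    return math.log(product)


def networked(t: float, n0: float = 1.0, n_rate: float = 0.5, rates=RATES) -> float:
    """Assumed additive network of N(t) = n0 * exp(n_rate * t) identical machines."""
    return n0 * math.exp(n_rate * t) * single_machine(t, rates)


# Year-on-year increments: constant for one machine (linear growth) ...
single_steps = [single_machine(t + 1) - single_machine(t) for t in range(5)]
# ... but ever-increasing for an exponentially growing network.
network_steps = [networked(t + 1) - networked(t) for t in range(5)]
print("single machine:", [round(x, 3) for x in single_steps])
print("network:       ", [round(x, 3) for x in network_steps])
```

The single-machine increments stay constant while the network's increments keep growing, which is the distinction between a 'logarithmic or linear race' and the networked case.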

FINAL THOUGHTS

What does all this mean? With the arrival of low-cost sensors and their rapid deployment on the periphery of networks, and in robotics, we are really much closer to achieving truly intelligent entities than ever before. Couple this with the creation of addressable databases and learning systems, and the opportunity for 'intelligent outcomes' is racing ahead. But for single machines, it is a 'logarithmic or linear race' and not an exponential one! Only if we network vast and exponentially growing numbers of machines will we see the previously assumed (and feared) exponential intelligence outcome.

Biological hardware and software are adaptable and evolve by mutation, and our machines can now do that too! But biological systems are 'born' into a supporting ecology, and the process has very definitely run from the simplest to the most complex over millennia. Our machines, on the other hand, are being born into an ecology that is being built top down in a few decades! Will this work as a complete support system? We don't know – yet!

We see 'life' exhibiting emergent and adaptable behaviours, 'fitting into a mature world' and competing for survival. Our systems are mostly designed to be task-specific, with an assured place in the pecking order. At this time we do not fully understand the implications for machine intelligence, but it is clear that they are important, and we are beyond the 'genesis point', with machines designing (in part and in full) other machines. In the next phase they will also be interacting with their biological counterparts and learning from them.

So far professionals have argued about what is and is not intelligent, and the analysis presented goes some way towards providing a reasonably quantified judgement. Leaving aside all other issues and arguments, it would appear that the arrival of a more general purpose intelligence is only a matter of when, and not what if. And there is only one question left to ask: will we be smart enough to recognise a new intelligence when it spontaneously erupts on the internet or within some complex system?

Next Event

the future of the Singularity

Thursday, May 19, 2011

Registration: 18:30-19:00, Conference: 19:00-21:15

Location: HTIB, 1e Weteringplantsoen 2c, 1017 SJ Amsterdam

The speakers and topics are

Frank Theys, philosopher, filmmaker and visual artist

Changed attitudes on ‘human enhancement’ since the turn of the millennium.

Yuri van Geest, Head of Emerging Technologies, THNK, the Amsterdam School of Creative Leadership.

Singularity – The Biggest Tech Wave is Here

Arjen Kamphuis, Co-founder, CTO, Gendo

The Singularity – Fantasy, threat or opportunity?

Moderated by Peter van Gorsel, Educational Business Developer, University of Amsterdam / UvA/HvA

Aquarius – undersea research laboratory

(Credit: NOAA) Aquarius is an underwater ocean laboratory located in the Florida Keys National Marine Sanctuary. The laboratory is deployed three and a half miles offshore, at a depth of 60 feet, next to spectacular coral reefs. Scientists live in Aquarius during ten-day missions, using saturation diving to study and explore our coastal ocean. Aquarius is the world's only operating undersea research laboratory. It is owned by NOAA and operated by the University of North Carolina Wilmington.

Since 1993, the Aquarius undersea lab has supported over 90 missions, producing some 300 peer-reviewed scientific publications along with numerous popular science articles, educational programs, and television spots. The Aquarius Reef Base is also supporting one of the longest running and detailed coral reef monitoring programs in the world, an ocean observing platform, and surface-based research in the Florida Keys National Marine Sanctuary.

In Science

- In 1994, scientists established a coral reef and fish monitoring and assessment program to investigate change in the Florida Keys Marine Sanctuary and to better understand the impacts of climate change, human influences, natural variability, and the effectiveness of marine reserves. Early results show that deep reefs are in better condition than those in shallow water. Continued studies will provide managers with vital information in their efforts to protect Florida's valuable reefs through ecosystem-based management and restoration strategies.

- Discovery of internal waves regularly impinging on reefs, bringing a 10 to 40-fold increase in natural nutrients, which is much greater than previously published estimates from nearshore pollution sources.

- The first descriptions and identification of a disease pathogen that devastated large hard corals in the Florida Keys, including important information about the distribution, abundance and potential causes of black-band disease. In another study a conclusive link to terrestrial runoff was discovered in a disease that killed large numbers of an ecologically important coral species throughout the Keys and Caribbean.

- New understanding of the magnitude and importance of sponge filtering on a coral reef; findings show it to be 10 times greater than measured in a laboratory setting and having an important influence on water quality.

- Used undersea drilling techniques to improve our understanding of the history of reef growth in Florida.

- Discovered the importance of water flow in coral feeding, and provided surface support for long-term coral spawning studies.

- Improved our understanding of herbivore interactions and their influence on the coral reef ecosystem; this is important as fish and algae populations change on reefs worldwide.

- Testing of a new underwater spectrophotometer and some of the first underwater measurements made of UV light.

- Creation of a linked ocean observing system consisting of buoy, seafloor, and water column sensors providing real-time data (temperature, salinity, waves, currents, fluorescence and more) to scientists and the public via the Internet.

In Safety and Technology

- Excellent safety record; regarded as a model for standards and training by the Navy saturation diving program.

- Since Aquarius operations began in Florida in 1993, technology has continually been upgraded and remains state of the art in saturation diving for ocean science.

- The barge tending the habitat was replaced with a high-tech Life Support Buoy (LSB), and mission control was relocated to a shore base.

- Communications system upgraded to allow broadcast quality video and audio feed from Aquarius and nearby work sites to shore, winning a national business award. Internet access and video conferencing made available to Aquarius.

- Addition of umbilical diving capabilities with communications and helmet cameras.

- Robotic undersea vehicle piloting capabilities from inside the habitat.

- Upgraded to diver-controlled tank fills at waystations positioned up to 1000 feet away from Aquarius.

- Ocean observing platform established with connection to shore and Internet, sensors powered by wind generator and solar panels; only ocean observing site with adjacent seafloor lab and in situ service for over 280 days per year.

- Partnership with NASA to train astronauts and develop technology for lunar exploration, communications, and base construction.

- Partnership with Navy to train divers and develop technology for future saturation systems.

In Education and Outreach

- Hundreds of graduate and undergraduate students have assisted in research during Aquarius Reef Base missions and surface diving operations.

- Website established and upgraded, including live webcams, with a new design underway; it receives about 50,000 visits per mission.

- Two Jason Project missions, reaching over 1,000,000 students in the U.S. and across the world with live broadcasts, specially designed curriculum, and enabling student aquanauts to visit the undersea lab.

- Television programming on National Geographic, Discovery, ABC News 20/20, NBC Today Show, CNN, Learning Channel.

- Public-oriented articles in Scientific American, Weekly Reader, National Geographic, Smithsonian, Geotimes and more.

- Numerous presentations given at national events, aquariums, schools, science centers and museums; some with live links to aquanauts living underwater.

- Student writing contest, scouting programs, summer internships

In Partnerships

Hundreds of partnering institutions since 1993, including:

- NOAA: Atlantic Oceanographic and Meteorological Laboratory, National Marine Sanctuary Program, and NOAA Southeast Fisheries

- Other federal programs: NASA, Navy, USGS

- Academic institutions: In Florida (UM Rosenstiel School of Marine and Atmospheric Science, NOVA Southeast U., FAU, FSU, FIO, FIT, HBOI, University of Tampa, USF), North Carolina (UNC Chapel Hill), and nationally (Virginia Institute of Marine Science, Stanford University, Scripps Institution of Oceanography, Georgia Institute of Technology, SUNY-Buffalo, CSU, University of South Carolina, WHOI, UC Berkeley)

- Private foundations – Living Oceans Foundation, Emerson Foundation, National Marine Sanctuary Foundation, The Nature Conservancy

- Industry – Harris Corporation, Motorola, United Space Alliance

Club of Amsterdam blog

http://clubofamsterdam.blogspot.com

March 24: Socratic Innovation

January 1: On the meaning of words

November 30: The happy organisation – a deontological theory of happiness

November 26: Utilitarianism for a broken future.

News about the Future

Projekt Zukunft (Project Future) — a Berlin Senate Initiative

Projekt Zukunft is an initiative for structural change in Berlin as an information and knowledge society. The goal is to develop the city into an internationally recognized, competitive and attractive location. Projekt Zukunft supports the up-and-coming branches of the media, IT and creative industries, interlinking them with scientific, political and management structures. Through strategies, initiatives and projects, public-private partnerships, events, information campaigns and publications, Projekt Zukunft supports technological, economic and societal innovations, and enhances the general conditions of the city's growth areas. Projekt Zukunft's broad service portfolio makes it the city's largest communications and support network.

The initiative is financed through funding from the state of Berlin and the EC (European Regional Development Fund), as well as from contributions from the network partners, such as other administrative departments, institutions and enterprises.

Earth Plastic

Earth Plastic is a breakthrough material that is biodegradable, recyclable and made from 100% post-consumer recycled plastic. Unlike traditional plastics which never biodegrade, Earth Plastic contains a proprietary blend of additives that enables microorganisms to break down the molecular structure of the plastic into humus-like material that is not harmful to the environment.

Products made with Earth Plastic material have the identical look, durability, shelf-life and function that you’d expect from a traditional plastic product, but are also earth-friendly since they are biodegradable and recyclable.

EU Strategy for equality between women and men 2010-2015

The Strategy for equality between women and men (2010-2015) was adopted on 21 September 2010.

Inequalities between women and men violate basic Human Rights. They also impose a heavy toll on the economy and represent an underutilisation of talent. Economic and business benefits can be gained from enhancing gender equality.

The Strategy for the period 2010-2015 builds on the experience of the Roadmap for equality between women and men (2006-2010). It is a comprehensive framework committing the Commission to promoting gender equality in all its policies.

The strategy highlights the contribution of gender equality to economic growth and sustainable development, and supports the implementation of the gender equality dimension in the Europe 2020 Strategy.

The thematic priorities are based around the “Women’s Charter”:

- equal economic independence for women and men;

- equal pay for work of equal value;

- equality in decision-making;

- dignity, integrity and ending gender violence;

- promoting gender equality beyond the EU;

- horizontal issues (gender roles, legislation and governance tools).

It follows the dual approach of gender mainstreaming and specific measures. For each priority area, key actions and detailed proposals for change and progress are described in an annex to the communication.

The new Strategy will aim at strengthening cooperation between the various actors and improving governance. It will lay the ground for future cooperation on gender equality with Member States, as well as with the European Parliament and other institutions and bodies, including the European Institute for Gender Equality.

Eurobarometer survey: Gender equality in the EU in 2009.

A survey on perceptions and experiences of Europeans with regard to gender equality was conducted between September and October 2009. The results were published in March 2010.

62 % of the respondents believe that gender inequality is a widespread phenomenon. The perception of how widespread it is, however, increases with age: the young generation (15-24) tends to regard gender inequality as less widespread than the older age group (55+).

Asked to choose from a list of options, Europeans selected violence against women (62 %) and the gender pay gap (50 %) as the two top priorities for action, and a large majority think these issues should be addressed urgently (92 % for violence against women and 82 % for the gender pay gap).

Most respondents think that decisions about gender equality should be made jointly within the European Union; 64 % believe there has been progress in the past decade, and over half are aware of the EU’s activities to combat gender inequalities.

Recommended Book

Human Enhancement

By Julian Savulescu, Nick Bostrom

To what extent should we use technology to try to make better human beings? Because of the remarkable advances in biomedical science, we must now find an answer to this question.

Human enhancement aims to increase human capacities above normal levels. Many forms of human enhancement are already in use. Many students and academics take cognition-enhancing drugs to get a competitive edge. Some top athletes boost their performance with legal and illegal substances. Many an office worker begins each day with a dose of caffeine. This is only the beginning. As science and technology advance further, it will become increasingly possible to enhance basic human capacities to increase or modulate cognition, mood, personality, and physical performance, and to control the biological processes underlying normal aging. Some have suggested that such advances would take us beyond the bounds of human nature.

These trends, and these dramatic prospects, raise profound ethical questions. They have generated intense public debate and have become a central topic of discussion within practical ethics. Should we side with bioconservatives, and forgo the use of any biomedical interventions aimed at enhancing human capacities? Should we side with transhumanists and embrace the new opportunities? Or should we perhaps plot some middle course?

Human Enhancement presents the latest moves in this crucial debate: original contributions from many of the world’s leading ethicists and moral thinkers, representing a wide range of perspectives: advocates and sceptics, enthusiasts and moderates. These are the arguments that will determine how humanity develops in the near future.

Creative Company Conference

A chance to show how much you really like the future

Creative Company Conference near Amsterdam invites you to spend a day surrounded by leading US, European, and Dutch creatives. We really mean ‘surrounded’: on our CCC Experience Floor, top speakers will give workshops and training sessions, and meet you and your business challenges in person. Highlights include Silicon Valley expert and best-selling author Sarah Lacy (TechCrunch), Stanford University, Beijing Design, Van den Ende & Deitmers Venture Capital, Local Intelligence Berlin and Lost Boys. What’s your name? Add it to CCC 2011!

Futurist Portrait: Michael Anissimov

“Hello and welcome. I’m Michael Anissimov, a science/technology writer and consultant living in San Francisco. I have a deep interest in the future of science and technology, and see technology as creating the board on which social and political games are played.

There are two particular technologies which will have a radical destabilizing effect on the globe once they pass certain progress thresholds – molecular nanotechnology (MNT) and Artificial General Intelligence (AGI). These technologies are likely to be deployed sometime between 2020 and 2040, and if we don’t handle them with the utmost caution, they could cause disaster.

Accordingly, much of the material you will find on this site is devoted to presenting evidence in favor of this view, and encouraging the reader to get involved in the ongoing ethics dialogue or even active support of research. I’m interested in many other topics, like arms control and foreign policy, but my focus on MNT and AGI is what distinguishes me from most people you’ll run across. Like so many other techie hipsters nowadays, my personal philosophy is transhumanist.

My background: in 2002, when I was a senior in high school, I co-founded the Immortality Institute, a life extension organization that today includes hundreds of paying members and an active online community. In 2006 I founded the Accelerating Future blog, which gets over 70,000 unique visitors per month and has been featured on G4TV, SciFi.com, and the front page of Digg and Reddit. I’m currently Media Director for the Singularity Institute for Artificial Intelligence, Fundraising Director, North America for the Lifeboat Foundation, and am involved in the Center for Responsible Nanotechnology. I’ve given talks on futurist issues at seminars and conferences in Los Angeles, Las Vegas, San Francisco, Palo Alto, and at Yale University.”

Technological Singularity

The Singularity and its Importance

Agenda

Our Season Program 2010/2011

| Date | Time | Event | Location |
|---|---|---|---|
| May 19, 2011 | 18:30 – 21:15 | the future of Singularity | HTIB, 1e Weteringplantsoen 2c, 1017 SJ Amsterdam |
| June 23, 2011 | 18:30 – 21:15 | the future of European Democracy | Nautiek.com, Veemkade 267, 1019 CZ Amsterdam (Ship SALVE) |

Past Season Events 2010/2011

- Oct 14, 2010: the future of Hacking
- Nov 25, 2010: the future of Happiness
- Jan 20, 2011: the future of Financial Infrastructure
- Feb 17, 2011: the future of Services
- March 17, 2011: the future of Shell
- April 14, 2011: the future of the Human Mind
