Welcome to the Club of Amsterdam Journal.

Biosensing is the conversion of biological processes into useful information. Incorporating “a variety of means, including electrical, electronic, and photonic devices; biological materials (e.g., tissue, enzymes, nucleic acids, etc.) and chemical analysis”, biosensing produces signals that detect biological elements and uses related technologies to convert these signals into readable data. From biomedicine to food production, and from the environment to security and defense, biosensing serves a rapidly growing industry. What is more, the Netherlands is home to a number of scientists who are currently working on biosensors, with the promise of groundbreaking new technologies across all of these fields. At “the Future of Biosensing”, several of these scientists will share insights into their work and describe how our future might be affected by these developments.

Join our next event, the future of Biosensing, on February 11 and share your thoughts.

Felix Bopp, editor-in-chief

Emotional Cartography

By Christian Nold, artist, designer and educator

from the book Emotional Cartography – Technologies of the Self

This book is a collection of essays from artists, psychogeographers, designers, cultural researchers, futurologists and neuroscientists, brought together to explore the political, social and cultural implications of visualising people’s intimate biometric data and emotions using technology. The book is the outcome of a research process that aimed to reach a deeper understanding of a project called ‘Bio Mapping’, which, since 2004, has involved thousands of participants in more than 16 countries. Bio Mapping emerged as a critical reaction to the currently dominant concept of pervasive technology, which aims for computer ‘intelligence’ to be integrated everywhere, including our everyday lives and even our bodies. The Bio Mapping project investigates the implications of creating technologies that let us record, visualise and share our intimate body-states with each other.

The Bio Mapping device: GPS (left), finger cuffs (top) and data logger (right).

To practically explore this subject, I invented and built the Bio Mapping device, a portable and wearable tool that records data from two technologies: a simple biometric sensor measuring Galvanic Skin Response and a Global Positioning System (GPS). The bio-sensor, which is based on a lie detector, measures changes in the sweat level of the wearer’s fingers. The assumption is that these changes are an indication of ‘emotional’ intensity. The GPS part of the device records the geographical location of the wearer anywhere in the world and pinpoints where that person is when these ‘emotional’ changes occur. This data can then be visualised in geographical mapping software such as Google Earth. The result is that the wearer’s journey becomes viewable as a visual track on a map, whose height indicates the level of physiological arousal at that particular moment. The Bio Mapping tool is therefore a unique device linking the personal and intimate with the outer space of satellites orbiting the Earth. The device appears to offer the colossal possibility of recording a person’s emotional state anywhere in the world, in the form of an ‘Emotional Map’.
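As a rough illustration of the step from logged readings to a track in Google Earth, the sketch below writes a KML file in which the height of each point stands for relative arousal. It is a minimal sketch, not the Bio Mapping software: the `Sample` record, the min-max normalisation and the 500 m maximum height are assumptions chosen for readability, and the readings in the example are invented. Only the KML elements used (`LineString`, `extrude`, `altitudeMode`, `coordinates`) come from the KML 2.2 format itself.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass
class Sample:
    """One logged reading: GPS position plus a raw GSR value (units assumed)."""
    lat: float
    lon: float
    gsr: float


def arousal_heights(samples, max_height_m=500.0):
    """Min-max normalise GSR readings and scale them to heights in metres."""
    if not samples:
        return []
    values = [s.gsr for s in samples]
    lo, hi = min(values), max(values)
    if hi == lo:
        # A flat signal carries no relative arousal information.
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) * max_height_m for v in values]


def to_kml(samples, name="Emotion Map", max_height_m=500.0):
    """Build a KML document holding one extruded track for a single walk."""
    heights = arousal_heights(samples, max_height_m)
    # KML writes each point as longitude,latitude,altitude.
    coords = " ".join(
        f"{s.lon:.6f},{s.lat:.6f},{h:.1f}" for s, h in zip(samples, heights)
    )
    return f"""<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <Document>
    <name>{escape(name)}</name>
    <Placemark>
      <LineString>
        <extrude>1</extrude>
        <altitudeMode>relativeToGround</altitudeMode>
        <coordinates>{coords}</coordinates>
      </LineString>
    </Placemark>
  </Document>
</kml>
"""


if __name__ == "__main__":
    # Invented readings for a short walk; the third one is a spike.
    walk = [
        Sample(51.4826, -0.0077, 2.1),
        Sample(51.4830, -0.0070, 2.4),
        Sample(51.4835, -0.0062, 3.9),
        Sample(51.4840, -0.0055, 2.2),
    ]
    print(to_kml(walk))
```

Normalising each walk to its own range keeps the track readable on a map, but it also means heights compare moments within one walk, not arousal levels between different people.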

People who actually wore the device, tried it out while going for a walk and then saw their own personal emotion map visualised afterwards were baffled and amazed. But their positive reactions hardly compared to the huge global newspaper and TV network attention that followed the launch of the project. People approached me with a bewildering array of commercial applications: estate agents in California wanting an insight into the geographical distribution of desire; car companies wanting to look at drivers’ stress; doctors trying to redesign their medical offices; and advertising agencies wanting to emotionally re-brand whole cities. Other emails arrived from academic sociologists, geographers, futurologists, economists, artists, architects and many urban planners, seeking new insights into their own disciplines. Surprisingly, there were also intensely personal emails from people who wanted to understand their own body and mind in more detail, asking for a therapeutic device to monitor their daily anxiety levels.

I was shocked: my device, or more correctly, the idea or fantasy of my device had struck a particular 21st century zeitgeist. A huge range of people had imagined ways of applying the concept, some of which I felt uncomfortable about. I realised that ‘Mapping Emotions’ had become a meme that was not mine anymore, but one that I had merely borrowed temporarily from the global unconscious. Faced with some dramatic choices, I decided to try to establish and document my own vision of emotion mapping as a reflexive and participatory methodology.

From Device to Methodology

From talking with people who tried out the device, I was struck by their detailed and personal interpretations of their bio-data. Often we would sit next to each other and look at their track together. While I would see just a fairly random spiky trail, they saw an intimate document of their journey, and recounted events which encompassed the full breadth of life: precarious traffic crossings, encounters with friends, meeting people they fancied, or the nervousness of walking past the house of an ex-partner. Sometimes people who walked along the same path would have spikes at different points, with one commenting on the smell of rotting ships while another was distracted by the CCTV cameras. People were using the Emotion Map as an embodied memory-trigger for recounting events that were personally significant for them. Sometimes these descriptions overlapped, while at other times they were unique. For them, the spikes were documenting not what we would commonly call ‘emotion’, but a variety of different sensations in relation to the external environment, such as awareness, sensory perception and surprise. I suddenly saw the importance of people interpreting their own raw bio-data for themselves.

Bio Mapping functions as a total inversion of the lie-detector, which supposes that the body tells the truth, while we lie with our spoken words. With Bio Mapping, people’s interpretation and public discussion of their own data becomes the true and meaningful record of their experience. Talking about their body data in this way, they are generating a new type of knowledge combining ‘objective’ biometric data and geographical position, with the ‘subjective story’ as a new kind of psychogeography.

Participants often describe the sensation of using the Bio Mapping tool as a kind of Reality TV show, where they can see their own life documented in front of them. Such a description suggests something similar to Bertolt Brecht’s notion of ‘Verfremdung’ (de-familiarisation). Brecht’s idea is that this performative distancing allows the viewer to take a critical distance from the events being viewed. In the case of Bio Mapping, the participants are vocalising their intimate internal mental life as well as their public behaviour to a communal group. In effect, the participants are carrying out a type of co-storytelling with the technology that allows them to creatively disclose, or omit, as much as they like of what happened during their walks. The Bio Mapping tool therefore acts as a ‘performative technology’, which shoulders the burden of holding the public’s attention while offering the ‘interpreter’ a safe distance from public exposure. Used in this way, the tool allows people who have never met each other to give elaborate descriptions of their own experiences, as well as their opinions on the local neighbourhood, in a way that they would never have done otherwise.

This vision of Bio Mapping as a performative tool which mediates relationships is very different to the fantasy of Emotion Mapping that many people approached me about: such as marketeers’ intentions to metaphorically ‘slice people’s heads open to see their innermost feelings and desires’.

Over time, I started to realise that both the particular context and the ways in which a biometric sensor is used dramatically affect the social relationships that are formed, as well as the types of observations that people make during the workshop.

The early Bio Mapping workshops had all taken place in art galleries in the centres of towns and cities. People often walked randomly for 30 minutes before returning to the exhibition to see their emotion maps. In such a context, the descriptions and annotations that people left were mainly anecdotal: drank a coke here, had an ice cream there, was spooked by pigeons, etc.

Once I started to work with local community organisations for longer periods of time, and in less central areas of towns where people lived and which they cared about (rather than just worked or shopped in), the annotations changed dramatically. Instead of being just about their momentary sensations in the space, participants told stories that intermingled their lives with the place, local history and politics. The discussions often began with noticing the bodily effect of car traffic on one person’s emotion map, and frequently led on to discussing the lack of public space and identifying its social and political causes. This process of scaling up and seeking connections between issues encouraged people to talk both personally and politically in a way they had often not done before with other local people.

At the end of each Bio Mapping workshop project, all the information and data gathered were designed into a printed map, which was then distributed for free in the locality. For example, for the Greenwich Emotion Map, this meant using GIS (Geographical Information Systems) software to create a communal arousal surface that blended together 80 people’s arousal data and annotations. The resulting communal ‘emotion surface’ is a conceptual challenge and question. Can we really blend together our emotions and experiences to construct a totally shared vision of place?
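The text does not say which GIS interpolation produced the communal surface. As a hedged illustration of the general idea of blending many people’s point readings into one surface, the sketch below uses inverse distance weighting (IDW), a common and simple interpolation method swapped in here as an assumption. Each participant’s readings are first normalised to 0..1, on the further assumption that raw skin-conductance levels differ too much between people to be averaged directly. The coordinates, grid and `power` parameter are illustrative only.

```python
import math


def normalise(values):
    """Rescale one participant's GSR readings to 0..1 so that people with a
    higher baseline skin conductance do not dominate the blend (an assumption)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def idw_surface(points, grid_x, grid_y, power=2.0):
    """Estimate a value at every (x, y) grid node from scattered readings.

    points: list of (x, y, value) tuples, x/y in metres (assumed projected CRS).
    Returns one row per grid_y value, each with one estimate per grid_x value.
    """
    if not points:
        raise ValueError("need at least one reading")
    surface = []
    for gy in grid_y:
        row = []
        for gx in grid_x:
            num = den = 0.0
            exact = None
            for px, py, value in points:
                d = math.hypot(gx - px, gy - py)
                if d == 0.0:
                    exact = value  # node coincides with a reading: use it as-is
                    break
                w = 1.0 / d ** power  # nearer readings get more weight
                num += w * value
                den += w
            row.append(exact if exact is not None else num / den)
        surface.append(row)
    return surface


if __name__ == "__main__":
    # Two hypothetical participants, each a list of (x, y, raw_gsr) readings.
    walks = [
        [(0.0, 0.0, 2.0), (50.0, 0.0, 6.0)],
        [(0.0, 50.0, 10.0), (50.0, 50.0, 12.0)],
    ]
    pooled = []
    for walk in walks:
        scaled = normalise([g for _, _, g in walk])
        pooled += [(x, y, s) for (x, y, _), s in zip(walk, scaled)]
    for row in idw_surface(pooled, [0.0, 25.0, 50.0], [0.0, 25.0, 50.0]):
        print([round(v, 2) for v in row])
```

Whatever the interpolation method, the blended surface inevitably smooths away individual spikes, which is one concrete way of seeing the question the text raises about a “totally shared vision of place”.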

You can download the book Emotional Cartography as a PDF.

Christian Nold exhibits “Bio Mapping”

February 11 at the future of Biosensing

Next Event

the future of Biosensing

Thursday, February 11, 2010

Registration: 18:30-19:00, Conference: 19:00-21:15

Location: Waag Society, Nieuwmarkt 4, 1012 CR Amsterdam

The speakers and topics are

Davide Iannuzzi, Associate Professor, VU University Amsterdam

Fiber-top micromachined devices: biosensors on the tip of a fiber

Robert Shepherd, Founder, Eduverse

Virtual worlds and biosensors

Bert Mik, Scientist and anesthesiologist, Erasmus Medical Center

Christian Nold, artist, designer and educator

“Bio Mapping” – an exhibition

Moderated by Iclal Akcay, Research Journalist

SixthSense

“Nothing can be and can not be one and at the same time and I am, I am Pranav Mistry.

Currently, I am a Research Assistant and PhD candidate at the MIT Media Lab. Before joining MIT I worked as a UX Researcher with Microsoft. I received my Master in Media Arts and Sciences from MIT and Master of Design from IIT Bombay. I have completed my bachelor’s degree in Computer Science and Engineering. Palanpur is my hometown, which is situated in northern Gujarat in India.

Exposure to fields from Design to Technology and from Art to Psychology gave me a quite nice/interesting viewpoint on the world. I love to see technology from a design perspective and vice versa. This vision is reflected in almost all of my projects and research work as well. In short, I do what I love and I love what I do. I am a ‘Desigineer’ 🙂”

‘SixthSense’ is a wearable gestural interface that augments the physical world around us with digital information and lets us use natural hand gestures to interact with that information. By using a camera and a tiny projector mounted in a pendant-like wearable device, ‘SixthSense’ sees what you see and visually augments any surfaces or objects you are interacting with. It projects information onto surfaces, walls and physical objects around us, and lets us interact with the projected information through natural hand gestures, arm movements, or our interaction with the object itself. ‘SixthSense’ attempts to free information from its confines by seamlessly integrating it with reality, thus making the entire world your computer.

Club of Amsterdam blog

News about the Future

Biochip gel keeps proteins in place

Biochips are arguably among the most important medical instruments to have appeared over the past few years, because they can analyze samples for a variety of contaminants and provide rapid results. Made up of miniaturized test sites (microarrays) on a solid substrate, these chips could become even smaller in the future, and cheaper to mass-produce. And that day may not be so far off, according to researchers from the Fraunhofer Institute for Applied Polymer Research IAP in Germany. They have created a gel that is able to make proteins feel “at home” on biochips.

Biochips carrying thousands of DNA fragments are widely used for examining genetic material. Experts would also like to have biochips on which proteins are anchored. This requires a gel layer which can now be produced industrially.

“We have developed a gel – a network of organic molecules – that we can apply to the surface of the biochip. This gel layer is only about 100 to 500 nanometers thick and consists mainly of water. We thus make the protein believe that it is in a solution, even though it is chemically connected to the network. It feels as if it is in its natural environment and continues to function even though it is on a biochip,” IAP Group Manager Dr. Andreas Hollander explains.

Apparently, this is one of the most important things about such a device, especially one laden with proteins. Proteins have a 3D structure, which they use to interact with various molecules or to control biological processes inside the human body. If they were to bind directly to the substrate of a biochip, they would lose this specific structure and become useless or, worse, taint the results of the analysis.

HYDROFILL is the world’s first personal hydrogen station, designed by Horizon. The small desktop device simply plugs into an AC outlet, a solar panel or a small wind turbine, automatically extracts hydrogen from its water tank and stores it in solid form in small refillable cartridges.

The cartridges contain metallic alloys that absorb hydrogen into their crystalline structure, and release it back at low pressures, removing concerns about storing hydrogen at high pressure. This storage method also creates the highest volumetric energy density of any form of hydrogen storage, even higher than liquid hydrogen. Unlike conventional batteries, these cartridges carry more energy capacity, are cheaper, and do not contain any environmentally-harmful heavy metals.

Recommended Book

Body Sensor Networks

By Guang-Zhong Yang (Editor), M. Yacoub (Foreword)

The last decade has seen a rapid surge of interest in new sensing and monitoring devices for healthcare and the use of wearable/wireless devices for clinical applications. One key development in this area is implantable in vivo monitoring and intervention devices. Several promising prototypes are emerging for managing patients with debilitating neurological disorders and for monitoring of patients with chronic cardiac diseases. Despite the technological developments of sensing and monitoring devices, issues related to system integration, sensor miniaturization, low-power sensor interface circuitry design, wireless telemetric links and signal processing have still to be investigated. Moreover, issues related to Quality of Service, security, multi-sensory data fusion, and decision support are active research topics.

This book addresses the issues of this rapidly changing field of wireless wearable and implantable sensors and discusses the latest technological developments and clinical applications of body-sensor networks.

How to make money from Emerging Technologies

By Tim Harper, Chairman, Cientifica

At Cientifica we have been working with emerging technologies for fifteen years, whether developing field emission displays in the mid-90s or advising governments, companies and the World Economic Forum in recent years. Over this period money has been made and lost in everything from medical devices to scientific instrumentation and carbon nanotubes, and this hands-on approach has left us with a wealth of practical experience.

As we approach the end of the first decade of a new millennium, science and technology are advancing faster than ever, with a wide range of new and emerging technologies ready to change the world and take investors for a ride.

As a sane and rational voice in a sea of hype, and one of the few companies whose clients have consistently been on the winning team in technology investment, we present a brief guide to making money out of emerging technologies for governments, companies and investors.

Hands Up Who Swallowed the Hype?

Somewhere near the dawn of time, when men clad in animal skins hunted mammoths to feed their families, and those of us who hadn’t lost everything in the dot-com crash shivered in the dark, a bright scientist named Mike Roco was writing a plan that was to become The National Nanotechnology Initiative.

While much of the document was eminently sensible, especially given the fact that most people’s view of nanotechnology was, at the time, influenced by the idea of little robots, nanobots, rather than the rather mundane world of advanced materials, this really didn’t matter. What really caught the imagination was the headline A TRILLION DOLLAR MARKET BY 2015 – and that’s where the trouble started.

This wasn’t the first time that a new emerging technology had been touted as the answer to all of humanity’s ills, or investors’ prayers, and it won’t be the last time. From biotech in the 1970s to synthetic biology now, we draw on Cientifica’s decades of investing expertise to offer a guide to how Governments, Companies and Investors can take advantage of emerging technologies without getting seduced by the hype.

Everyone, from venture capitalists who had made a few million from the dot-com era to entrepreneurs and scientists, looked at the magic trillion-dollar number and thought “I want a piece of that!”

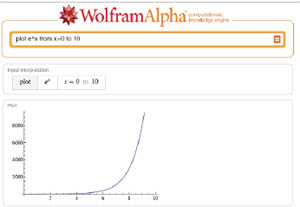

However, like almost every business plan we have seen, the plan contained a prediction that looked something like the graphic above, where the x-axis goes from now to some point in the future, far enough away to seem plausible, but not so near as to be laughable.

This is, of course, the dreaded exponential curve, a much-abused but little-understood mathematical function. If we consider the x-axis to be time, and the y-axis to be sales, revenues, profits, return on an investment, ability to attract good-looking girls or any other measure that we want to market, then it looks quite attractive. What is less attractive is that while the last portion of the curve looks like a worthwhile investment, almost 70% of it gives very poor yields.
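The exact share depends on how steep the curve is and on what counts as a poor yield. The short calculation below, a sketch under those stated assumptions, finds the share of a time horizon during which a pure exponential, exp(k·t), stays below a chosen fraction of its final value. The parameters in the example (doubling every year for ten years, with “poor” meaning less than 10% of the final value) are illustrative, not taken from any business plan; with them the share comes out at about two-thirds.

```python
import math


def share_below(fraction_of_final, growth_rate, horizon):
    """Share of [0, horizon] during which exp(growth_rate * t) is below
    fraction_of_final times its value at t = horizon."""
    if not 0.0 < fraction_of_final <= 1.0 or growth_rate <= 0.0 or horizon <= 0.0:
        raise ValueError("need 0 < fraction <= 1 and positive rate and horizon")
    # exp(k t) < f * exp(k T)  <=>  t < T + ln(f) / k
    cutoff = horizon + math.log(fraction_of_final) / growth_rate
    # Clamp to [0, 1]; a share of 0 means even the starting value exceeds the threshold.
    return min(max(cutoff / horizon, 0.0), 1.0)


if __name__ == "__main__":
    doubling_yearly = math.log(2)  # k such that exp(k) == 2
    print(f"{share_below(0.1, doubling_yearly, 10):.1%}")  # prints 66.8%
```

Steeper curves or longer horizons push the share higher; a gentler curve lowers it, which is why a single percentage should be read as illustrative rather than universal.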

Unfortunately, much of this was the result of people not checking their facts. We still see business plans based entirely on a 2007 study of nanotechnology markets which predicts that some sector or other will suddenly start gobbling up huge chunks of nanotechnologies (showing exponential growth). We see revenues that suddenly increase by an order of magnitude in a year, with entrepreneurs calculating back from some fantastical figure just over the horizon rather than doing their own market research.
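One quick check on a plan that has been calculated back from a figure just over the horizon is to compute the compound annual growth rate it implies. The sketch below does only that; the revenue figures in the example are hypothetical, and deciding what counts as an implausible rate is a judgement for the analyst, not something the formula settles.

```python
def implied_cagr(current, target, years):
    """Compound annual growth rate needed to get from current to target."""
    if current <= 0 or target <= 0 or years <= 0:
        raise ValueError("current, target and years must all be positive")
    return (target / current) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Hypothetical plan: revenue of 1 million today, 100 million in five years.
    print(f"{implied_cagr(1e6, 100e6, 5):.0%} per year")  # prints 151% per year
    # An order-of-magnitude jump in a single year implies 900% growth.
    print(f"{implied_cagr(1e6, 10e6, 1):.0%} in one year")  # prints 900% in one year
```

Seeing the required rate written out, year after year, makes it easier to ask whether anything in the company’s own market research supports it.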

We’re now seeing similar wild predictions for synthetic biology, industrial biotech and a host of other areas – remember that wherever there is hope there is a market research firm ready to help you develop the kind of numbers that investors like to see.

But look at it this way, five years ago no one predicted Twitter, Facebook or the iPhone, so why would anyone attempt to build a business on a market predicted to start emerging in eight or nine years?

While there is money to be made from emerging technologies, the magnitude of the returns, and their nature, differ widely between the various players. Governments want wider economic returns, while investors just want a positive return while minimising risk. This white paper examines three different strategies for reaping the benefits of nanotechnologies.

What Governments Should Do

We spent a lot of time over the last ten years asking various governments why they were setting up nanotechnology programs, and the answer was usually to remain competitive in a technologically based world. Behind the bluff was an element of ‘me too’ as many policy makers looked at the US National Nanotechnology Initiative and wondered whether they should try to muscle in on the action more effectively than they did with semiconductors or the Internet. The result was a glut of nanotechnology research programs that proved a bonanza for infrastructure and instrumentation companies. While the peak of the clean-tech mania seems to have passed, science has no shortage of other potential panaceas to throw at the world’s ills.

However, ten years on there are a number of challenges that governments are facing:

- Nanotechnology has been heavily funded for ten years, yet we still only see a handful of products and little in the way of the total transformation that was promised in the early days. This leads politicians to believe that funding can be diverted to other ‘hot’ areas such as clean tech where announcements of new programs can be more easily quantified in terms of swaying undecided votes in their direction.

- Increasing pressure to focus on grand challenges and applied science rather than pure knowledge, or impact-based research funding as it is increasingly known.

- Competition for funding – as more areas of technology emerge, whether synthetic biology, clean tech, industrial biotech etc., governments are faced with static budgets and increased competition due to Credit Crunch Economics. The danger is that existing programs die a death of a thousand cuts as fewer resources are spread to placate more academic pressure groups, resulting in an under-funded and ineffective technology program a mile wide and an inch deep.

- Consumer pressure groups are increasingly anti-technology. While some of their concerns are legitimate, many are not, and for policy makers who are not scientific experts (and few are), speculative concerns are given equal weighting with scientific results.

So what can Governments do to ensure a more effective transfer of technologies from the lab to the economy?

- Support basic research. While we know a lot more than we used to about nanotechnologies we are still at an early stage. It has taken biotech thirty years to get this far, and all emerging technologies will face a similar long haul. The more we understand about the basics, the easier it is to turn something that works in a lab into an industrial process. To achieve that, you really need to understand the science behind the phenomena, and that takes ten to fifteen years.

- Create more spin-outs. Easy to say, but how much time and effort do governments waste supporting small technology-based businesses when very few of them actually exist? The usual bunch of professional project managers who haven’t moved technology forward one iota in ten years will suck up any government cash. A system of small, no-strings-attached grants for technology-based start-ups would encourage university spin-outs and support them through that difficult first year of product development. We are talking about tens of thousands of pounds, not millions.

- Address the issues of process and manufacturing. Many countries trumpet a wide range of open access facilities, but how many of them actually do what business needs? The key to getting to market is moving a lab-based process to an industrial process, meaning not just scale-up, but quality control and reproducibility. For a technology to be usable it needs to be a reproducible process with quality control to ensure that the result will be the same whether you produce a gram or a ton, and whether you do that this week, next week, or in ten years’ time. Leveraging existing expertise in pharmaceuticals and chemicals is an obvious place to start.

- Cut regulation for start-ups, don’t strangle them at birth. Not every start-up works, many don’t, but some do. It’s a numbers game but funding more start-ups to employ more people is surely better than funding people to be idle.

- Fire 90% of university tech transfer people and replace them with people who understand how small businesses and science-based innovation actually work. We have spent months negotiating with some institutions that make unreasonable demands and ask for detailed ten-year revenue projections when current economic conditions can change in ten minutes.

What Companies Should Do

Some companies have done very well out of nanotechnologies, while others have fared less well. The golden rule seems to be:

Avoid nanomaterials at all costs (unless you are a large chemical company)

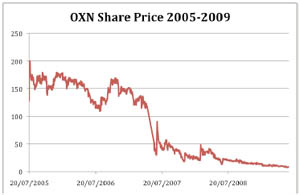

Oxonica, publicly quoted in the UK, is a classic illustration of the typical trajectory of a nanomaterials company. After five years of trying out different applications of nanomaterials, from phosphors to fuel additives, the company began to gain traction and managed to hold an initial public offering valuing the company at some £150 million. However, many of its products were generic, for example titania nanoparticles for sunscreens, and could not be protected, while others were licensed, which bogged the company down in disputes about intellectual property and licence terms. With only a few products to sell, and required to find channels to market in areas as diverse as cosmetics, fuel additives and security, the company was unable to realise its early potential. Investors gradually lost patience and the company withdrew from London’s AIM market in 2009.

Despite this cautionary tale, many large companies that have invested heavily in carbon nanomaterials production, such as Mitsubishi Chemical, Bayer and Arkema, still seem to be searching for a market. While large chemical companies can, and often do, take a fifteen- or twenty-year view, smaller companies have a tough time getting their investors to swallow more than a few years.

Even the largest companies have been under strain recently, leading to five main challenges:

- We built it (the plant) but they didn’t come – many companies have made the transition from lab-scale production to pilot plants, and the better funded among them have even built full-scale plants, but often the growth stops there. Why? The reason is often access to markets (see below).

- Breaking out of the sales trap – emerging technologies can have diverse applications. While a large company such as BASF can add a few additional lines to its existing catalogues of paints, coatings, adhesives etc., as it already has a well-established presence, smaller companies often do not have the resources to develop each channel, relying instead on one or two large potential customers. But who would companies rather trust, a well-established multinational or a few guys who just spun out a company?

- High R&D costs in an era of falling budgets – the latest numbers show R&D spending holding up well despite the economic climate, but pressure is mounting, especially on longer-term R&D projects to show some evidence of success.

- Cost of litigation – Oxonica, Raymor, and Evident are just three of the nanotech companies that came close to failure in 2009 as a result of litigation. Smaller companies simply do not have the resources, either financial or human, to fight long energy sapping legal battles with larger competitors.

- Fear of backlash / litigation / class action – With any new material or process there is the possibility of unforeseen effects. It is almost impossible to determine the full lifecycle of any material, and in complex multivariate systems such as the environment there is always a possibility, no matter how remote, of unintended consequences.

So what can companies do to ensure a return on their investments thus far?

- Search for complementary technology to address market needs – one-trick companies rarely succeed, and a large part of addressing market needs involves finding out what end users really want, whether master batches, dispersions or a more usable user interface.

- Avoid commoditising your core product – Simply producing a material puts you in direct competition with the bulk chemical industry. Companies that protect their product and add value through IP or trade secrets (how do you get it to stay dispersed?) can prevent erosion of margins.

- Take advantage of government R&D collaboration facilities – the last ten years have seen massive government investment in infrastructure, much of it intended to be made available to industry. Some academics are more receptive to industry than others, but as your taxes have already paid for it, use it!

- Create a product or process – magnetic storage was nothing new, but when Apple took advantage of giant magnetoresistance, which made 2″ hard drives possible, a whole new industry was born. The iPod was made possible by Nobel Prize-winning nanotechnology, but the technology was not developed by Apple, even though Apple made all the money.

- Partner for channels to market – a company may have the technology but it probably won’t have the channels it needs to get it into the hands of the people who want it. As an example, big pharmaceutical companies own most of the medical sales channels, and it is often faster and cheaper in the long run to partner with a big player, provided that your technology doesn’t disrupt their business too much.

What Investors Should Do

How can you do appropriate due diligence on a technology that is so new that you have nothing to compare it to? You can’t, so investors need to look at more creative ways of funding emerging technologies.

Technology IPOs are few and far between these days, and if businesses funded by a couple of guys with a couple of laptops, which wind up reaching hundreds of millions of people, have problems, what is the situation like for a couple of guys who need a lab full of glassware and some pretty fancy instrumentation just to get to proof of concept? Dismal!

Many investors find it hard to make rational decisions when it comes to investing in emerging technologies, because often the technology is too new and the markets too diverse, but more importantly because they are too far removed from normal everyday experience. (Policy makers have similar issues, in that they can grasp the importance of things that relate to their daily lives and tend to ignore the rest.) In general, investing in emerging technologies is more like taking an option on the future than scoring a sure-fire slam-dunk home run, and it is fraught with danger, but there are steps investors can take to minimise risk.

- First and foremost, ignore any reports citing exponential growth and do your own due diligence. Is the science feasible? Is there anybody on the management team who would look credible to investors? How much does the company know about its target market, and how does it know this? If all the market numbers come from someone else’s work, then walk away.

- Invest for the long haul. That’s easy for multinationals and sovereign wealth funds to do, less easy for VCs and private equity and even trickier for private investors. Despite claims that we are accelerating towards a technological singularity it still takes ten to fifteen years from the emergence of a new discipline to making serious money.

- Don’t look at the basic technology but at what it enables. Coming back to the iPod analogy, you don’t have to invent a technology to take advantage of it. In the same way that the iPhone generated huge opportunities for people to develop applications running on the platform, thin-film flexible photovoltaics will require all kinds of infrastructure, from smart metering to storage. Leave the heavy lifting to the huge armour-plated rhinos that can raise $100 million, and just be the bird that lives off the ticks.

- Look for revenue as well as exits. While an IPO or acquisition is the big payoff, loan notes, R-class stock or even consultancy fees are good ways to share in any growth in valuation and/or revenue.

- Finally, and importantly, don’t invest in something you don’t understand. If you can’t tell your proteins from your enzymes or your protons from your electrons either get someone to explain it to you or steer well clear of emerging technologies.

Conclusion

So does this make investment in emerging technologies a good or a bad idea? It depends who you are and why you are investing.

For governments it should be a no-brainer. Countries such as South Korea, Taiwan and Singapore managed to build wealthy advanced economies as a direct result of investing in technology, and they have the institutions in place to ensure that they can hold on to their position through nanotech, biotech and information technology. However, as the case of the United Kingdom shows, investing solely in research without ensuring that technology is efficiently transferred to the wider economy can squander a once promising lead.

For companies the situation is less clear. Emerging technologies are a good long-term strategic bet, but they need a degree of maturity before they translate directly into profits. Companies that can take a ten-year view of technology will find it rewarding, but those with shorter-term needs will always find it more profitable to outsource innovation to start-ups.

Finally, for the investment community, it once again depends on your timescale. Anyone expecting a rash of dot-com-style companies that can be built and sold within eighteen months will be sorely disappointed, but investors with the ability to raise follow-on capital, or to view technology as a longer-term asset, will do well. Unfortunately, this excludes 95% of the venture capital industry!

Futurist Portrait: Lidewij Edelkoort

Li Edelkoort is one of the world’s most renowned trend forecasters.

Her work has pioneered trend forecasting as a profession; from the creation of innovative trend books and audiovisuals since the 1980s to long-ranging lifestyle analysis and research for the world’s leading brands today.

Li announces the concepts, colours and materials that will be in fashion two or more years in advance because “there is no creation without advance knowledge, and without design, a product cannot exist.” In this way, she and her close-knit teams guide professionals in interpreting the evolution of society and the early signals of consumer tastes to come, without forgetting economic reality.

Born in Holland in 1950, Li Edelkoort studied fashion and design at the School of Fine Arts in Arnhem, and upon graduation became a trend forecaster at the leading Dutch department store De Bijenkorf. There she discovered her talent for sensing upcoming trends and her unique ability to predict what consumers would want to buy several seasons ahead. This brought her to Paris in 1975, where she worked first as an independent trend consultant and soon founded Trend Union.

Li has received continual recognition for her work in providing inspirational stimulus and fostering creative talent. In 2003, TIME magazine named her one of the world’s 25 most influential people in fashion, and one year later she received the Netherlands’ Grand Seigneur prize for her work in fashion and textiles. In 2005, Aid to Artisans honoured Li Edelkoort with a lifetime achievement award for her support of craft and design. More recently, in March 2007, she was named Chevalier des Arts et des Lettres by the French Ministry of Culture and Communication. Li Edelkoort is famous for her lively and inspiring seminars and speeches.

Twice a year with Trend Union, she tours the world to present her fashion and interiors trends audiovisuals, starting in Paris and then visiting Stockholm, Copenhagen, London, Amsterdam, Tokyo, New York and Los Angeles.

Li also makes trend presentations on request for companies, industry associations and institutions. With her team, Li has created trend forums and audiovisuals that have remained imprinted in the minds of the textile and design community, through installations for Première Vision in the 1990s and more recently for Pitti Filati, Pratotrade and Moda Pelle.

Since she was appointed Chairwoman of the Design Academy Eindhoven, this already well-known institution has gained in reputation through her vision of the future of education, coupled with her knowledge of design and industry. Exhibitions are regularly organised in Eindhoven with the Graduation Show, or during major events such as Milan’s Salone del Mobile.

Reflecting her increasing involvement in art and design, Li also curates exhibitions such as Armour: the fortification of man in the Netherlands, and an installation as part of Skin Tight: the sensibility of the flesh at Chicago’s Museum of Contemporary Art and New York’s Stephen Weiss Studio.

Archeology of the Future, an exhibition at the Designhuis in Eindhoven, The Netherlands, runs from 26 March to 31 May 2009. It maps and analyses the most influential tendencies of the last 20 years as seen through the eyes of Li Edelkoort, who has led the Designhuis as its director since the beginning of this year. It is the first exhibition of its kind to outline and define trend forecasting as a profession, and it is entirely dedicated to Li’s oeuvre.

Agenda

Season Program 2009/2010:

- February 11, 18:30-21:15: the future of Biosensing. Location: Waag Society, Nieuwmarkt 4, 1012 CR Amsterdam

- March 25, 18:30-21:15: the future of Sports. Location: Amsterdam

- April 29, 18:30-21:15: the future of Music. Location: Hogeschool van Amsterdam, Amstelcampus, Rhijnspoorplein 1, 1091 GC Amsterdam

- June 3, 18:30-21:15: the future of CERN. Location: Amsterdam
