by Christine Perey

The people, processes and technologies we use in business meetings change continuously in order to increase efficiency in the workplace or enhance meeting productivity. How can the addition of more technology help more than it hurts? The goal of this article is to take what is currently known about meetings and to overlay a vision of the future, to see how new technologies based on advanced signal processing and information analysis can have a positive impact on meetings.

The reader will also learn about the AMI Project and explore how moving beyond the analysis of simple verbal communications – adding non-verbal communications – can reveal deeper trends and patterns. Applications using AMI technology could give people the ability:

- to prepare better for upcoming meetings,

- to review parts of meetings in progress or past meetings missed,

- to analyze behaviors and positions taken by individuals or groups, and

- to attend multiple meetings without missing critical elements in any of them.

At a management level, technologies that analyze verbal and non-verbal content and communications could be integrated with other enterprise management systems to:

- be the basis of meeting behavior/methods training programs, even permitting self-analysis by participants,

- improve team construction based on team members’ past meeting behaviors,

- reduce risk of disclosures and delays caused by underlying conflicts, and

- recommend strategies for human resource utilization across multiple projects and teams.

The Augmented Multimodal Interaction Project

The AMI Project, an EU-funded research project involving dozens of scientists across a fifteen-member consortium, focuses on meetings in order to develop intelligent software algorithms and systems. The algorithms and related technologies under development can become core building blocks from which products and services may emerge for use by people in and between meetings.

Scientists in the AMI Project bring expertise from many disciplines. They include world-renowned experts in the fields of speech processing, video/vision processing and human-computer interfaces, as well as sociology, psychology and linguistics. Their research focuses on the human-to-human communication that occurs during product design meetings. The design of the research should permit expansion of the scope to include many more types of meetings and team processes.

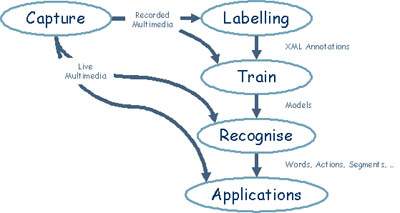

The AMI Project uses statistical machine learning to improve our understanding of business meetings and to produce software building blocks. The process of developing these core technologies begins by extracting information from large numbers of multimedia meeting recordings. All the information of interest is labeled. Based on the labels, models are developed (“trained”) to recognize events, words and other patterns of interest. Then, once the models can reliably recognize information from the sources on which they were trained, a system deployed in a meeting environment can automatically recognize patterns in the new multimedia meeting data it receives.
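The label, train and recognize steps described above can be illustrated with a deliberately tiny sketch. It is not the AMI Project's method: the nearest-centroid classifier, the two-number feature vectors and the labels "speaking" and "nodding" are all assumptions chosen only to keep the example short.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled examples: (feature vector, label).
# The two numbers stand in for signals such as speech energy and head motion.
LABELED = [
    ((0.9, 0.1), "speaking"),
    ((0.8, 0.2), "speaking"),
    ((0.1, 0.9), "nodding"),
    ((0.2, 0.8), "nodding"),
]

def train(examples):
    """Average the feature vectors of each label into one centroid per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(axis) / len(vectors) for axis in zip(*vectors))
        for label, vectors in grouped.items()
    }

def recognize(model, features):
    """Return the label whose centroid is closest to a new observation."""
    return min(model, key=lambda label: dist(model[label], features))

model = train(LABELED)                       # the "training" step
prediction = recognize(model, (0.85, 0.3))   # new, unlabeled meeting data
```

A real system would work on far richer audio and video features and far more data, but the division of labor is the same: labels drive training, and the trained model is then applied to data it has not seen.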

Applications for AMI technologies in meetings

AMI technologies can add value to participants between and during meetings.

Between meetings

In the future, knowledge workers will need to access multimedia assets from the corporate knowledge base for their work between meetings and, using AMI research-based technologies, will have the tools to do so with fewer of the issues we encounter today.

Anyone can imagine situations in which it would be useful to search a corporation's archives of past meetings (meetings in which the searcher herself participated or meetings conducted by others) for particular key attributes or content. The problem is that the key attributes are “lost” among the uninteresting meeting segments.

Meetings could be more effective if, prior to entering, each participant were better prepared. More preparation takes time, however, and without the proper tools it can take more time than the rewards justify. The AMI technology can be integrated into tools that help people prepare for their meetings.

Between meetings a user of the multimedia meeting archive can:

- Review a summary of one or any number of past meetings,

- Search one or more past meetings to answer specific questions,

- Browse one or more past meetings to answer specific or general questions,

- View the entire meeting (or multiple meetings) in faster-than-real time, and

- Detect patterns exhibited by groups or individuals during past meetings, which may provide insights into the upcoming meeting.

Imagine what it would be like if knowledge workers who are unable to attend a meeting, one to which they would have added value or from which they could have benefited, had access to recordings and the functions above. Wouldn’t the process and reliability of “catching up” be different?

Better Summaries are Crucial

Today if a person misses a meeting, they must go to others who attended and ask for a summary. Meeting summaries used in business today are verbal, contain the biases of a participant’s point of view and are not searchable. Sometimes summaries can be in the form of notes or minutes but most meeting participants do not have the time to formalize their conclusions in a form that is useful to others in a project team.

A summary should capture the essence of the content of a meeting. There are as many summary formats as types of meetings. One can imagine options such as:

- Bullet summary,

- Paragraph summary,

- Summary in audio, and

- Summary in audio, video and with supporting media introduced during the meeting.

Regardless of the presentation media or depth of summaries, those who rely on them need their content to be linked to the detailed contents (the multimedia record) of the meeting. In much the way one navigates a web site or any interactive application, a summary statement should be a “window” into the meeting at the particular time when an issue is discussed or a decision made. The idea of an intelligent meeting database architecture that would be able to produce summaries of multiple meetings is also part of the AMI vision. From the summary, the user of meeting archives must also be able to search, browse and have flexible ways of accessing the contents of the meeting or multiple meetings in a database.

Searching, Browsing and Skimming Archives

When unstructured media files from a meeting archive are indexed and stored in an appropriate repository, their contents are temporally associated with structured data, consisting of other relevant information in the database (time stamps, text transcripts of speech and all written additions or information projected on the screen, names of people in a meeting, the subject of the meeting, the agenda of the meeting, and any files introduced during the meeting).
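As a rough illustration of this temporal association, the sketch below attaches a time range, a speaker and a transcript to each segment of a recording and searches across meetings. The `Segment` fields, the meeting names and the sample transcripts are hypothetical; they do not describe the actual AMI database.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    meeting: str      # meeting identifier
    start: float      # seconds from the start of the recording
    end: float
    speaker: str
    transcript: str

# Hypothetical archive entries spanning two meetings.
ARCHIVE = [
    Segment("design-review-1", 0.0, 42.0, "Alice", "Let us review the remote control budget"),
    Segment("design-review-1", 42.0, 95.0, "Bob", "The battery cost is too high"),
    Segment("design-review-2", 10.0, 60.0, "Alice", "We decided to lower the battery cost"),
]

def search(archive, keyword, speaker=None):
    """Return (meeting, start time) pairs for segments that mention the keyword."""
    keyword = keyword.lower()
    return [
        (seg.meeting, seg.start)
        for seg in archive
        if keyword in seg.transcript.lower()
        and (speaker is None or seg.speaker == speaker)
    ]

hits = search(ARCHIVE, "battery")
alice_hits = search(ARCHIVE, "battery", speaker="Alice")
```

Each hit carries a meeting identifier and a time offset, so a user interface could jump straight to that moment in the recording rather than returning only the text.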

A user interface for a multimedia meeting repository provides a search function. One can imagine a dialog box in which an inquiry is entered by the user (it might be typed in using a keyboard but in the future, the user might speak or point to designate what aspects are sought). A pop-up menu might have the most frequently asked search threads.

Questions that the AMI Project is using machine learning to answer on its database of meetings include:

- Who is in the meeting?

- What are the participants saying?

- When and how do they communicate?

- What are they doing?

- What are their emotional states?

- What are they looking at (focus of attention)?

Based on the details, higher order questions are asked, such as:

- What topics are discussed and when?

- What decisions are made and by whom?

- What roles do the participants play?

- What positions do they take on issues?

- What activities are completed?

- What tasks are assigned or reported done?

In some cases, the person using a meeting archive may not know exactly what they are looking for. This requires a different type of interaction with the archive: the repository permits “skimming” in a linear fashion as well as non-linear browsing (through text).

Accelerating Meeting Playback

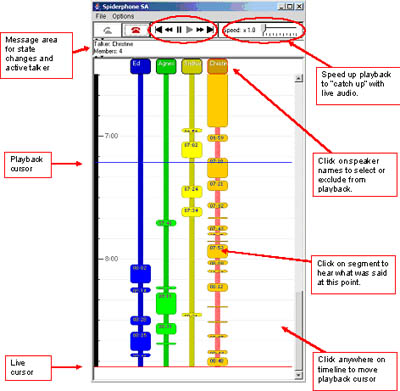

The user may also seek tools to experience the meeting in less time than it took to conduct it. As illustrated in Figure 2, the user can accelerate the playback of a telephone conference by asking to hear or “see” only those sections attributable to a particular meeting participant, or can adjust the playback speed of all the meeting media. This is a use of AMI technologies between meetings.

Imagine being in a meeting and suddenly needing to step out to attend to an emergency, or arriving after a meeting has already begun. Prior to entering or returning to the meeting, the participant could obtain the essence of the segment missed, allowing the meeting to continue without interruption or loss of context. Figure 2 illustrates how the user would control the playback of a meeting in progress.
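The two playback controls described in this section, selecting a single participant and changing the speed, can be sketched as follows. The segment list and the `playback_plan` function are hypothetical, and the sketch assumes the recording has already been divided into segments attributed to speakers.

```python
# Hypothetical speaker-attributed segments: (speaker, start_seconds, end_seconds)
SEGMENTS = [
    ("Alice", 0, 120),
    ("Bob", 120, 300),
    ("Alice", 300, 360),
    ("Carol", 360, 600),
]

def playback_plan(segments, speaker=None, speed=1.0):
    """Select the segments to play and estimate the listening time in seconds."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    # Keep every segment, or only those attributed to the requested speaker.
    selected = [s for s in segments if speaker is None or s[0] == speaker]
    recorded = sum(end - start for _, start, end in selected)
    return selected, recorded / speed

alice_only, alice_time = playback_plan(SEGMENTS, speaker="Alice", speed=1.5)
everything, full_time = playback_plan(SEGMENTS, speed=2.0)
```

With this sample data, the ten-minute meeting shrinks to five minutes at double speed, and to two minutes when only Alice's contributions are played at 1.5 times normal speed.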

Detecting Patterns

Summaries are, in some ways, the detection and compression of patterns into smaller, more accessible chunks. Patterns can come in any shape and size. They may consist of the use of a word or expression, a gesture or another non-verbal type of communication, such as nodding to indicate agreement or nodding when a person is drowsy. These are subtle differences that the human brain can distinguish and that, in time, the algorithms on which AMI is working will also be able to detect and flag or enter in the database for use by meeting applications.

In some scenarios for AMI technology use, a meeting participant’s gestures or position relative to others can be the cue that causes a response in a virtual representation of a remote participant. For example, as illustrated in Figure 3, when all the participants in the meeting are turned towards a white board, the virtual participant is expected to turn similarly.

Detecting patterns could also help decisions in rendering agent actions (body language). If during a meeting everyone has their arms folded, would the remote participant also seek to assume this posture? These are other examples of how using AMI technology to detect patterns could be valuable during meetings.

Support during meetings

Just as archives can be resources to people between meetings, and just as AMI can help the late meeting participant get “caught up,” the recordings of past meetings should also serve as resources to participants during a meeting. Suppose participants in a meeting wish to answer a question about a previous meeting. Features similar to those accessible between meetings should be available but would also take into account the participants of the live meeting and the sensitivity of the sources or contents of past meetings.

Improving Meeting Management and Progress

There are many scenarios for improving the flow and dynamics of communication during a meeting. Since the AMI project technologies are able to measure the interactions and participation of people in a meeting, analyses could be summarized and presented to a chairperson during a meeting. Imagine a system that compares a proposed (ideal?) agenda with the progress of an actual meeting and alerts the participants about deviations from their goals.
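A minimal sketch of such an agenda comparison appears below. The agenda items, the minutes observed so far and the 25 percent tolerance are all hypothetical values, and the sketch assumes that another component has already worked out how much time was spent on each item.

```python
# Hypothetical agenda as (item, planned minutes), plus minutes observed so far.
AGENDA = [("Status", 10), ("Budget", 15), ("Risks", 20)]
ACTUAL = {"Status": 18, "Budget": 14}

def deviations(agenda, actual, tolerance=0.25):
    """List items that overran the plan by more than the tolerance or were not reached."""
    alerts = []
    for item, planned in agenda:
        spent = actual.get(item)
        if spent is None:
            alerts.append(f"{item}: not yet discussed")
        elif spent > planned * (1 + tolerance):
            alerts.append(f"{item}: {spent - planned} min over plan")
    return alerts

report = deviations(AGENDA, ACTUAL)
```

The tolerance keeps the system from flagging small overruns; what counts as a meaningful deviation would be a choice for the chairperson or the organization.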

In some more futuristic AMI application scenarios, the directives or opinions of leaders or the behaviors of participants in past meetings could be privately or publicly compared with real-time progress. The meeting chair could use the comparison to re-orient discussions towards key issues that are known to cause delays in a project, for example.

A meeting and the life cycle of a project can be shortened if known obstacles are anticipated and addressed. Imagine a meeting in which an action item is being taken to prepare an analysis of a risk. If a knowledge worker can be notified that such an analysis already exists, either by searching past meeting repositories or through an agent that automatically compares new action items with past ones, the meeting chairperson can introduce the relevant conclusions and accelerate the project.

An AMI-based technology could help the moderator of the meeting follow how long a monologue has been in progress and intervene to involve more participants in a discussion. Metrics such as time spoken, the number of times a participant has successfully “grabbed” the floor, and the number of people who are paying attention to a participant (regardless of whether that participant has the floor) all help to manage the process of a meeting or a project’s outcome more effectively. People who repeatedly grab the floor could receive automatically generated notifications that others are finding their input valuable or irritating, permitting them to adapt their behavior in real time.
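Two of the metrics named above, time spoken and floor grabs, along with the longest single turn, can be computed from an ordered log of speaker turns, as in this sketch. The turn log is hypothetical, and the attention metric is left out because it would require gaze or head-pose data that the sketch does not model.

```python
from collections import Counter

# Hypothetical turn log in chronological order: (speaker, start_seconds, end_seconds)
TURNS = [
    ("Alice", 0, 200),
    ("Bob", 200, 230),
    ("Alice", 230, 500),
    ("Carol", 500, 520),
    ("Alice", 520, 600),
]

def participation(turns):
    """Return time spoken per person, floor grabs per person, and the longest turn."""
    time_spoken = Counter()
    floor_grabs = Counter()
    longest = ("", 0)          # (speaker, seconds) of the longest single turn
    previous = None
    for speaker, start, end in turns:
        duration = end - start
        time_spoken[speaker] += duration
        if speaker != previous:
            # The floor changed hands, so this speaker "grabbed" it.
            floor_grabs[speaker] += 1
        if duration > longest[1]:
            longest = (speaker, duration)
        previous = speaker
    return time_spoken, floor_grabs, longest

time_spoken, floor_grabs, longest = participation(TURNS)
```

In this sample, Alice speaks for 550 of the 600 seconds and takes the floor three times, which is the kind of imbalance a moderator might want to be alerted to.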

Meeting Agents

Frequently it is necessary for the success of multiple projects for a person to be assigned responsibilities with overlapping time requirements. Another scenario for AMI technologies includes a system which helps knowledge workers “attend” two or more meetings simultaneously. The individual may participate in one meeting in person or by telephone and request to have an agent monitor one or more meetings. Provided participants in another meeting agree, the monitoring agent can be configured to detect real time events such as changes in the agenda, discussion of a particular item on the agenda which concerns the employee directly, a new person entering the meeting or someone who is known to be important leaving a meeting. This could optimize the use of limited human resources.

In the AMI demonstration of this scenario, the Remote Meeting Assistant (RMA) will detect the events it has been configured to monitor (e.g., keywords, entry or exit, change in dialog, debates) and alert the user. These could be real-time alerts (via a pop-up or “toaster” notification, like an instant message), and they could be compiled for later review. Taking action based on information provided by an RMA would require first gaining the context for the alert, perhaps by way of an accelerated playback of recent remarks or discussion.
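The monitoring behavior described here can be sketched as a filter over a stream of meeting events. The `Watch` configuration, the event tuples and the alert wording are hypothetical and are not taken from the RMA prototype; the sketch also assumes that speech has already been transcribed and that entries and exits have already been detected.

```python
from dataclasses import dataclass, field

@dataclass
class Watch:
    """What the user has asked the agent to monitor."""
    keywords: set = field(default_factory=set)
    people: set = field(default_factory=set)

# Hypothetical event stream: (seconds into the meeting, kind, value)
EVENTS = [
    (30, "speech", "Next item is the Q3 budget"),
    (45, "enter", "Dana"),
    (90, "speech", "Let us talk about hiring"),
    (120, "exit", "Erin"),
]

def alerts(events, watch):
    """Return (time, message) pairs for the events that match the watch list."""
    out = []
    for t, kind, value in events:
        if kind == "speech":
            matched = sorted(k for k in watch.keywords if k.lower() in value.lower())
            if matched:
                out.append((t, f"keyword: {', '.join(matched)}"))
        elif kind in ("enter", "exit") and value in watch.people:
            out.append((t, f"{value} {kind}"))
    return out

watch = Watch(keywords={"budget"}, people={"Erin"})
notifications = alerts(EVENTS, watch)
```

Because each alert keeps its timestamp, the same list can drive a real-time notification or be compiled for later review, and the timestamp gives a starting point for replaying the surrounding discussion.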

Bringing Science in contact with Business, Aligning Vision with Real World Limits

In the business of meetings, it is crucial for those in the trenches, those who are managing the technologies for enterprise meetings, to expand their frameworks, vocabularies and working models of business process in order to envision more efficient workforces and accelerated, highly informed decision making. At the same time, the research community will be working on machine learning and the development of algorithms that process multi-modal signals with ever-increasing accuracy, regardless of the use for these systems in the enterprise. Where possible, the research and business communities can nourish one another and better the world.

It is important, regardless of the scope of one's vision or on which side of the science-business divide one stands, to understand the practical limits of technology as well as the ability of businesses and people to change. Some of the applications are challenging to implement in real-time products and will not be realized for decades due to the processing complexity.

Only time will tell how large an impact the AMI Project will have on people, processes and technology of the future. Without very strong incentives, humans resist changing their behaviors. Cost of technology requiring investments greater than the foreseen return may also be an obstacle. Other scenarios explored in this article are difficult to implement for reasons other than known technology limitations. The only thing we can be certain of is that in the future there will continue to be business meetings, and they can be dramatically improved using new technologies.

Transfer of AMI technology into mainstream products and services is a crucial aspect of the project. Vendors developing meeting technologies must experience the research underway in the AMI Project to assess opportunities for future features and functionality. For information on the AMI Project approach and to see demonstrations and screen movies of the technology prototypes in action, visit www.amiproject.org

About Christine Perey

Christine Perey is the principal of PEREY Research & Consulting, based in Montreux, Switzerland. Perey focuses on multimedia communications and offers technology or market-specific services such as opportunity and risk analyses, business development and strategic planning services to video and visual communications technology vendors and service providers. She is responsible for technology transfer and manages the Community of Interest for the AMI Project. She can be reached at cperey@amiproject.org
